Generation of Images using Generative Adversarial Networks for Augmentation of Training Data in Re-identification Models

State of the art

Abstract

Person re-identification is a technique used in artificial intelligence and machine learning to recognize the same person across different images or videos, even when they are captured from different angles, under different lighting, or wearing different clothing. It is used in applications such as security surveillance, identifying people in digital images, and analyzing behavior in videos. Re-identification relies on machine learning models that learn the features that distinguish one person from another. These models are trained on datasets of images or videos of people labeled with the identity of each person. Recognition is nevertheless challenging because the features that identify a person, such as clothing, hairstyle, and other aspects of their appearance, can change. Training is also hampered by the limited amount of available data, since privacy concerns restrict the collection of images and videos of people. To overcome these challenges, pre-processing and deep learning techniques can be used so that the model adapts to variations in a person's appearance and recognizes relevant features even in low-quality images or videos. Currently, the most widely used training datasets are limited in size: Market-1501 contains 1,501 people recorded with 6 cameras, and DukeMTMC-reID contains 702 training identities recorded with 8 cameras. In this work, we investigate the use of a generative adversarial network, together with data augmentation techniques, to train a person re-identification model.

State of the art

In this work, we review the state of the art in the use of generative adversarial networks (GANs) for data augmentation to improve the performance of re-identification models.

The article "Generative Adversarial Networks" by Ian Goodfellow et al. (2014) introduced generative adversarial networks (GANs), a framework for training generative models that produce synthetic data, such as images, resembling a given training dataset. A GAN is composed of two neural networks trained simultaneously: a generator and a discriminator. The generator produces synthetic samples from random noise, while the discriminator estimates the probability that a given sample comes from the real dataset rather than from the generator. The two networks are trained against each other: the generator aims to produce samples so realistic that the discriminator cannot distinguish them from real ones. The article presents experiments on image datasets (MNIST, the Toronto Face Database, and CIFAR-10) showing that GANs can generate plausible synthetic images, and outlines possible extensions such as conditional generation and semi-supervised learning.

Since this first article, new architectures have been developed that improve the quality and control of the generated images. One example is CycleGAN, proposed in 2017, which translates images from one domain or style to another without requiring paired examples; it combines two generator-discriminator pairs, one for each translation direction, and constrains them so that translating an image to the other domain and back recovers the original image. Thanks to these improvements, GANs are increasingly used to augment training data, which improves the performance of machine learning models when only limited datasets are available. As shown in the chart, research on the use of GANs for data augmentation in re-identification models has increased significantly since 2018. The articles have been grouped into four categories: three correspond to different methods for generating new artificial images, and the fourth groups state-of-the-art reviews.

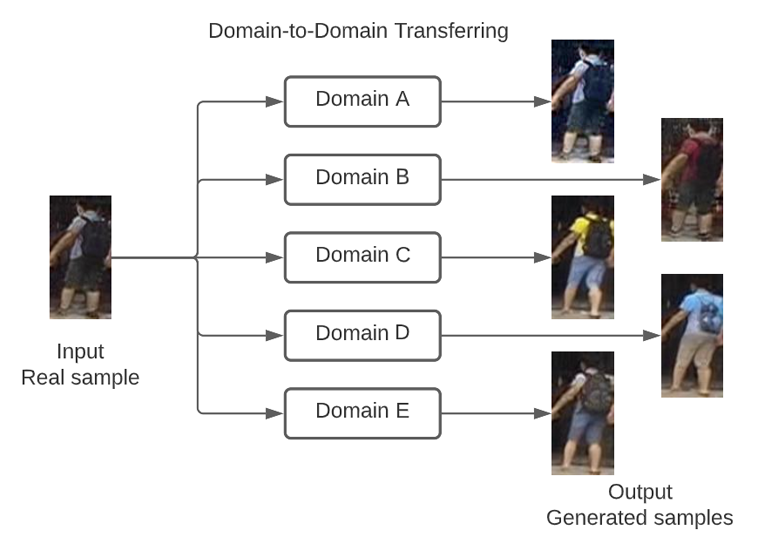
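To make the adversarial training between generator and discriminator concrete, the following sketch computes both losses from the discriminator's outputs. Goodfellow et al. define the value function V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))], which the discriminator maximizes and the generator minimizes; they also suggest training the generator to maximize log D(G(z)) instead, because it gives stronger gradients early in training. The function names and the plain-list inputs are assumptions made for illustration; a real implementation would compute these quantities on network outputs inside a deep learning framework.

```python
import math

# Minimal sketch of the GAN training objectives from Goodfellow et al. (2014).
# Discriminator outputs are plain probabilities here; in practice they would
# come from a neural network applied to a batch of images.

EPS = 1e-12  # avoids log(0) for saturated discriminator outputs


def discriminator_loss(d_real, d_fake):
    """Negative value function -V(D, G), which the discriminator minimizes.

    d_real: D(x) for a batch of real images.
    d_fake: D(G(z)) for a batch of generated images.
    """
    real_term = sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: maximize log D(G(z))."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# At the theoretical equilibrium the discriminator outputs 1/2 everywhere,
# so its loss equals log 4.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # ~1.386 (log 4)
# A discriminator that separates real from generated images has a lower loss.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))
```

The generator and discriminator are updated alternately with these two losses; when the generator improves, D(G(z)) rises and the discriminator's loss moves back toward log 4.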

Domain-to-Domain transferring

Starting from a real input image, the generator produces artificial images in the different styles, or domains, on which it was trained. This allows the style of one dataset to be transferred to another, for example from an art painting to a real photo or, in re-identification, from the images of one camera or dataset to those of another. The generated images differ from the input image in aspects such as color, tone, and lighting, but the structure of the image is not changed.

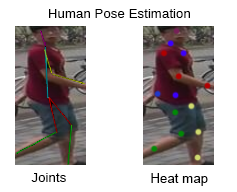

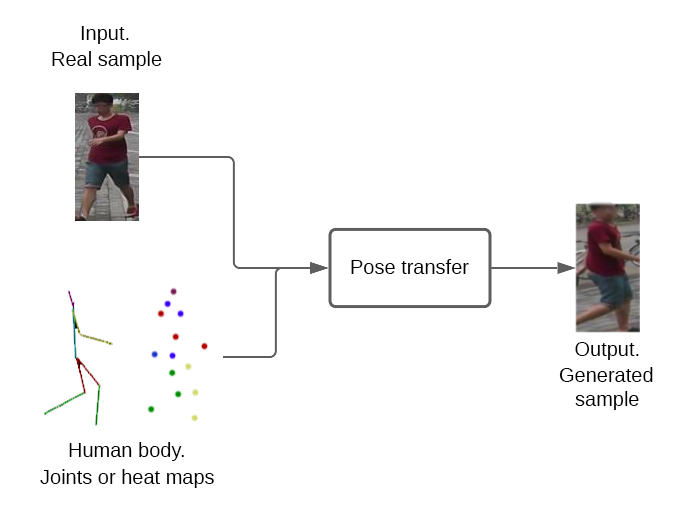
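For example, CycleGAN (2017) keeps the structure of the input image by adding a cycle-consistency term to its adversarial losses: translating an image to the other domain and back should recover the original. The sketch below computes only that term. Images are represented as flat lists of pixel intensities, the two generators are toy functions standing in for neural networks, and the L1 distance is averaged per pixel; all of these are simplifying assumptions, and the helper names are hypothetical.

```python
# Minimal sketch of the CycleGAN cycle-consistency loss.
# Images are flat lists of pixel intensities; the "generators" are plain
# functions standing in for neural networks (an assumption for illustration).


def mean_abs_diff(a, b):
    """Mean absolute (L1) difference between two images of equal size."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(g, f, images_x, images_y):
    """Average ||F(G(x)) - x||_1 over domain X plus ||G(F(y)) - y||_1 over domain Y.

    g translates domain X -> Y (e.g. camera A style -> camera B style),
    f translates domain Y -> X.
    """
    forward = sum(mean_abs_diff(f(g(x)), x) for x in images_x) / len(images_x)
    backward = sum(mean_abs_diff(g(f(y)), y) for y in images_y) / len(images_y)
    return forward + backward


# Toy "style transfer": domain Y is domain X brightened by 0.1.
def brighten(img):
    return [p + 0.1 for p in img]


def darken(img):
    return [p - 0.1 for p in img]


xs = [[0.2, 0.4, 0.6], [0.1, 0.3, 0.5]]
ys = [brighten(x) for x in xs]

# Mutually inverse generators reconstruct their inputs: loss is ~0.
print(cycle_consistency_loss(brighten, darken, xs, ys))
# A generator pair that is not mutually inverse is penalized.
print(cycle_consistency_loss(brighten, lambda img: list(img), xs, ys))
```

Because only the round trip is constrained, the generators are free to change color and lighting but are discouraged from altering content that could not be recovered, which matches the behavior of domain-transfer GANs described above.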

Pose modification

The aim of these GANs is for the generator to create images of a person in new poses that are indistinguishable from real images in the training dataset. The generator takes as input a real image of the person together with a heat map or joint map encoding the skeleton of the target pose. Because the generated images keep the person's identity, they can be added to the training dataset of the re-identification model, increasing the variety of poses available for each person.
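The sketch below shows one way the generator's input could be assembled: the channels of the source image are stacked with one heat map per joint of the target pose. It assumes joints are given as (x, y) pixel coordinates and encoded as Gaussian heat maps; other encodings (for example, binary disks around each joint) are also used, and the function names are hypothetical.

```python
import math

# Minimal sketch of assembling a pose-guided generator's input.
# Assumptions: joints are (x, y) pixel coordinates, each joint is encoded as a
# Gaussian heat map, and images are nested lists indexed [channel][row][col].


def gaussian_heatmap(height, width, cx, cy, sigma=2.0):
    """Heat map with a 2D Gaussian centred on joint (cx, cy), peak value 1.0."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2 * sigma**2)) for x in range(width)]
        for y in range(height)
    ]


def pose_to_heatmaps(keypoints, height, width, sigma=2.0):
    """One heat map per joint; undetected joints (None) give an all-zero map."""
    maps = []
    for joint in keypoints:
        if joint is None:
            maps.append([[0.0] * width for _ in range(height)])
        else:
            cx, cy = joint
            maps.append(gaussian_heatmap(height, width, cx, cy, sigma))
    return maps


def build_generator_input(image_channels, target_pose, sigma=2.0):
    """Concatenate the image channels (e.g. R, G, B) with the target-pose heat maps."""
    height = len(image_channels[0])
    width = len(image_channels[0][0])
    return image_channels + pose_to_heatmaps(target_pose, height, width, sigma)


# A tiny 8x4 "image" with 3 channels and a target pose with two joints,
# the second of which was not detected.
image = [[[0.5] * 4 for _ in range(8)] for _ in range(3)]
target_pose = [(1, 2), None]
generator_input = build_generator_input(image, target_pose)
print(len(generator_input))  # 5 channels: 3 image + 2 pose
```

Keeping the pose as separate channels lets a convolutional generator see, at every pixel, both the appearance of the source image and where each joint of the target pose should be.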

Random artificial images

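Artificial images are generated from random noise, without controlling the identity, pose, or style of the person, and are then labeled with specific techniques so that they can be used to train the re-identification model.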
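One labeling technique of this kind is label smoothing regularization for outliers (LSRO), proposed by Zheng et al. (2017): since images generated from random noise do not belong to any real identity, each one is assigned a uniform label distribution over all training identities instead of a single identity. The sketch below computes the resulting cross-entropy loss for one image; representing the classifier output as a plain list of scores is a simplification, and the function names are hypothetical.

```python
import math

# Minimal sketch of LSRO-style labeling: real images keep a one-hot identity
# label, while GAN images generated from noise get a uniform distribution
# over all K training identities.


def log_softmax(logits):
    """Numerically stable log-softmax over a list of class scores."""
    m = max(logits)
    log_total = math.log(sum(math.exp(v - m) for v in logits))
    return [v - m - log_total for v in logits]


def target_distribution(label, num_classes):
    """One-hot target for a real image, uniform target for a generated one (label=None)."""
    if label is None:
        return [1.0 / num_classes] * num_classes
    target = [0.0] * num_classes
    target[label] = 1.0
    return target


def reid_loss(logits, label):
    """Cross-entropy between the target distribution and the model's prediction."""
    target = target_distribution(label, len(logits))
    return -sum(t * lp for t, lp in zip(target, log_softmax(logits)))


scores = [5.0, 0.0, 0.0, 0.0]  # the model is confident the image shows identity 0
print(reid_loss(scores, 0))     # real image of identity 0: low loss
print(reid_loss(scores, None))  # generated image: the confident prediction is penalized
```

The uniform target pushes the model not to assign generated images confidently to any single identity, so they act as a regularizer rather than as extra examples of existing people.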

State-of-the-art reviews

State-of-the-art reviews are used by researchers and practitioners to gain an overview of a research field, to identify its main trends and challenges, and as a basis for new research and projects. In this category, we include reviews that specifically address the use of generative adversarial networks to augment training data for re-identification models.

Chart

Chart of the state-of-the-art articles published over time, divided into the four categories described above. Each circle represents a year, and each article is shown in the color of its category. In the interactive version of the chart, each article links to the original publication.

Legend: State-of-the-art reviews (blue), Random artificial images (green), Domain-to-Domain transferring (red), Pose modification (orange). Years shown: 2017 to 2021.

The chart was generated automatically by converting a .bib file into a format compatible with Chart.js. The code is available at the following link.
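As an illustration of this kind of conversion, the following hypothetical sketch (not the authors' linked code) parses simple BibTeX entries and groups them into Chart.js datasets. It assumes that each entry stores its category in a `keywords` field, that field values use single-level braces, and that each entry's closing brace sits on its own line. The output is a scatter-style Chart.js `data` object, a `datasets` array whose points are `{x, y}` objects; the layout of the actual chart, where each circle represents a year, may differ.

```python
import json
import re
from collections import defaultdict

# Hypothetical sketch: BibTeX -> Chart.js datasets.
# Assumptions: category stored in a "keywords" field, no nested braces in
# field values, closing brace of each entry on its own line.

CATEGORIES = {
    "review": ("State-of-the-art reviews", "#3a2bff"),
    "random": ("Random artificial images", "#008000"),
    "transfer": ("Domain-to-Domain transferring", "#FF0000"),
    "pose": ("Pose modification", "#fc9303"),
}

ENTRY_RE = re.compile(r"@\w+\s*\{\s*([^,\s]+)\s*,(.*?)\n\}", re.DOTALL)
FIELD_RE = re.compile(r"(\w+)\s*=\s*\{(.*?)\}")


def parse_bib(text):
    """Return one dict per BibTeX entry, with lowercase field names."""
    entries = []
    for key, body in ENTRY_RE.findall(text):
        fields = {name.lower(): value.strip() for name, value in FIELD_RE.findall(body)}
        fields["key"] = key
        entries.append(fields)
    return entries


def to_chartjs(entries):
    """Group entries by category; x is the year, y stacks articles within a year."""
    datasets = defaultdict(list)
    per_year = defaultdict(int)
    for entry in entries:
        category = entry.get("keywords", "")
        if category not in CATEGORIES or "year" not in entry:
            continue  # skip entries without a known category or a year
        year = int(entry["year"])
        per_year[year] += 1
        datasets[category].append(
            {"x": year, "y": per_year[year], "title": entry.get("title", ""), "url": entry.get("url", "")}
        )
    return {
        "datasets": [
            {"label": CATEGORIES[c][0], "backgroundColor": CATEGORIES[c][1], "data": points}
            for c, points in datasets.items()
        ]
    }


bib = """@article{a1,
  title = {Paper A},
  year = {2018},
  keywords = {pose},
  url = {https://example.org/a}
}
@article{a2,
  title = {Paper B},
  year = {2018},
  keywords = {transfer}
}
"""
print(json.dumps(to_chartjs(parse_bib(bib)), indent=2))
```

Storing the title and URL alongside each point keeps them available to the chart's tooltip and click handlers, which is one way to make each article link to its original publication.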


Acknowledgements

We would like to express our gratitude to William Peebles for generously allowing us to use his project page template, which can be found at https://www.wpeebles.com/gangealing.

The language model ChatGPT was used to translate the main text from Spanish to English.